Why Enterprises Should Choose a Third-Party Knowledge Management Platform (KMP) Instead of Building In-House

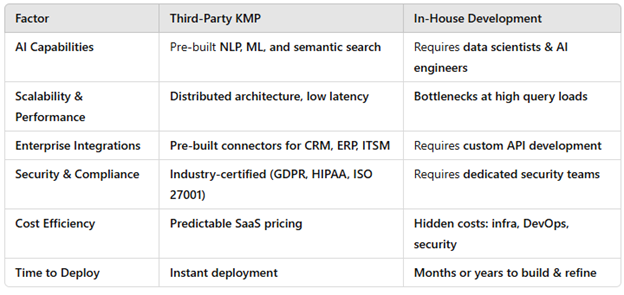

A common question among CIOs and IT leaders when discussing AI strategy is whether to build a custom Knowledge Management Platform (KMP) in-house or to adopt a third-party, enterprise-grade solution. Full control, customizability, and potential cost savings can draw organizations toward an in-house approach. However, the complexity and ongoing maintenance required for a robust AI-driven KMP make a third-party solution the far better choice for most enterprises. Below, we analyze the critical factors that influence this decision from technical, operational, and financial perspectives.

1. Complexity of AI-Driven Knowledge Architecture

Modern enterprise Knowledge Management Platforms (KMPs) are not just document repositories; they rely on sophisticated AI and machine learning components to structure, optimize, and deliver knowledge in real time. To replicate a third-party platform's capabilities, an in-house solution would require extensive investment in:

A. Natural Language Processing (NLP) and Semantic Search

- Challenge: Standard enterprise search engines rely on keyword matching, often returning irrelevant or incomplete results.

- Third-Party Advantage: AI-powered KMPs use semantic search, entity recognition, and intent detection to understand the context behind a query, significantly improving search accuracy.

- In-House Challenge: Developing and training proprietary NLP models would require expertise in data science, computational linguistics, and ML infrastructure—fields that most enterprises are not equipped to handle internally.
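To make the keyword-versus-semantic distinction concrete, here is a minimal sketch that ranks documents by cosine similarity between embedding vectors. It assumes the embeddings already exist: the three-dimensional vectors and the `DOCS`, `keyword_search`, and `semantic_search` names are hypothetical, and in a real platform the vectors would come from a trained embedding model, which is exactly the part that is hard to build in-house.

```python
import math

# Hypothetical toy embeddings. A real system would generate these with a
# trained embedding model; the values here are invented for illustration.
DOCS = {
    "Resetting your VPN password": [0.9, 0.1, 0.0],
    "Remote access credential recovery": [0.85, 0.15, 0.05],
    "Quarterly sales report template": [0.0, 0.2, 0.95],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def keyword_search(query, titles):
    """Naive keyword matching: titles that share at least one word with the query."""
    terms = set(query.lower().split())
    return [t for t in titles if terms & set(t.lower().split())]

def semantic_search(query_vec, docs, k=2):
    """Rank documents by cosine similarity to the query embedding; return the top k."""
    ranked = sorted(docs, key=lambda t: cosine(query_vec, docs[t]), reverse=True)
    return ranked[:k]
```

For a query such as "how do I recover my login", `keyword_search` finds nothing because no title shares an exact word ("recover" is not "recovery"), while `semantic_search` with a query vector like `[0.88, 0.12, 0.02]` returns the two access-related articles.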

B. Knowledge Graphs and Ontologies

- Challenge: Structuring enterprise knowledge in a way that machines can interpret requires building a knowledge graph—a complex, interlinked representation of business rules, processes, and terminologies.

- Third-Party Advantage: Enterprise-grade KMPs come with pre-built, domain-specific ontologies (formal models of the concepts in a domain and the relationships between them) that dynamically structure knowledge.

- In-House Challenge: Designing and maintaining an evolving ontology and taxonomy that correctly maps relationships across enterprise-wide data sources is a long-term, resource-intensive initiative.
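As a rough illustration of what a knowledge graph encodes, the sketch below stores a tiny ontology as (subject, predicate, object) triples and infers facts by following `is_a` links. The concepts, the `is_a` and `handled_by` predicates, and the helper names are all hypothetical; production graphs add schemas, provenance, and far larger rule sets.

```python
from collections import defaultdict

# Hypothetical ontology fragment expressed as (subject, predicate, object) triples.
TRIPLES = [
    ("VPN outage", "is_a", "Network incident"),
    ("Network incident", "is_a", "IT incident"),
    ("IT incident", "handled_by", "Service Desk"),
    ("Payroll error", "is_a", "HR incident"),
]

def build_index(triples):
    """Index objects by (subject, predicate) for fast lookups."""
    index = defaultdict(list)
    for subject, predicate, obj in triples:
        index[(subject, predicate)].append(obj)
    return index

def ancestors(index, concept):
    """All concepts reachable from `concept` through transitive is_a links."""
    seen, stack = [], [concept]
    while stack:
        for parent in index[(stack.pop(), "is_a")]:
            if parent not in seen:  # guard against revisiting (and cycles)
                seen.append(parent)
                stack.append(parent)
    return seen

def who_handles(index, concept):
    """Inherit handled_by from the concept itself or its nearest ancestor that defines it."""
    for c in [concept] + ancestors(index, concept):
        if index[(c, "handled_by")]:
            return index[(c, "handled_by")][0]
    return None
```

Here "VPN outage" is routed to "Service Desk" even though no triple says so directly; the answer is inferred from the class hierarchy. Keeping such hierarchies correct as the business changes is the ongoing cost described above.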

C. AI-Powered Content Curation and Maintenance

- Challenge: In homegrown systems, keeping knowledge bases up to date is typically a manual, error-prone process.

- Third-Party Advantage: AI-driven KMPs use machine learning algorithms to automatically detect outdated, redundant, and conflicting information, suggesting updates or archiving content based on real-world usage.

- In-House Challenge: Implementing AI-driven content validation requires substantial expertise in machine learning, content lifecycle management, and automated version control.
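The sketch below shows the shape of two basic curation checks: flagging articles whose last review is older than a cutoff, and flagging near-duplicate pairs by word-set (Jaccard) overlap. This is a deliberately simple rule-based stand-in, not the machine-learning approach vendors describe; the article records, field names, 365-day window, and 0.7 threshold are all hypothetical.

```python
from datetime import date, timedelta

# Hypothetical article records; field names are illustrative assumptions.
ARTICLES = [
    {"id": "KB-1", "text": "reset vpn password via self service portal", "reviewed": date(2023, 1, 10)},
    {"id": "KB-2", "text": "reset the vpn password via the self service portal", "reviewed": date(2024, 11, 2)},
    {"id": "KB-3", "text": "submit expense reports before month end", "reviewed": date(2024, 12, 1)},
]

def jaccard(a, b):
    """Overlap of the two texts' word sets: |A & B| / |A | B|."""
    sa, sb = set(a.split()), set(b.split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def find_stale(articles, today, max_age_days=365):
    """IDs of articles not reviewed within the last `max_age_days` days."""
    cutoff = today - timedelta(days=max_age_days)
    return [a["id"] for a in articles if a["reviewed"] < cutoff]

def find_near_duplicates(articles, threshold=0.7):
    """Pairs of article IDs whose word-set similarity meets the threshold."""
    pairs = []
    for i, a in enumerate(articles):
        for b in articles[i + 1:]:
            if jaccard(a["text"], b["text"]) >= threshold:
                pairs.append((a["id"], b["id"]))
    return pairs
```

Rules like these catch only the obvious cases; detecting conflicting guidance or usage-driven relevance decay is where the ML expertise mentioned above comes in.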

2. Scalability and Performance Optimization

An enterprise KMP must be able to ingest, index, and retrieve massive volumes of knowledge while delivering real-time responses to users. Scalability challenges include:

A. Handling High Query Volumes with Low Latency

- Third-Party Advantage: Vendor solutions employ distributed indexing, AI-enhanced caching, and scalable microservices architectures to handle enterprise workloads.

- In-House Challenge: Without the right data infrastructure (e.g., Elasticsearch clusters, vector databases, and memory-optimized compute layers), homegrown systems quickly become bottlenecks under heavy query loads.
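Result caching is one of the standard levers for keeping latency low under repeated queries. The sketch below is a plain LRU cache with per-entry expiry (TTL) wrapped around a search call; it is not the "AI-enhanced" caching vendors advertise, and the `TTLCache` and `cached_search` names, the query normalization, and the `backend` callable are hypothetical.

```python
import time
from collections import OrderedDict

class TTLCache:
    """Minimal LRU cache whose entries also expire after `ttl` seconds."""

    def __init__(self, maxsize=1024, ttl=60.0, clock=time.monotonic):
        self.maxsize, self.ttl, self.clock = maxsize, ttl, clock
        self._data = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self.clock() >= expires_at:  # entry has expired: drop it
            del self._data[key]
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._data[key] = (self.clock() + self.ttl, value)
        self._data.move_to_end(key)
        if len(self._data) > self.maxsize:
            self._data.popitem(last=False)  # evict the least recently used entry

def cached_search(cache, query, backend):
    """Serve repeated queries from the cache; otherwise call the (hypothetical) backend."""
    key = query.strip().lower()  # normalize so trivial variants share one entry
    hit = cache.get(key)
    if hit is not None:
        return hit
    result = backend(query)
    cache.put(key, result)
    return result
```

A single-process cache like this does not survive restarts or span multiple nodes; doing that at enterprise scale typically means a distributed cache and index tier, which is part of the infrastructure burden described above.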

B. Real-Time Knowledge Delivery Across Channels

- Third-Party Advantage: Modern KMPs seamlessly integrate with chatbots, customer service portals, internal enterprise tools, and mobile applications.

- In-House Challenge: Building and maintaining APIs, SDKs, and multi-channel knowledge delivery mechanisms is an ongoing challenge that requires dedicated DevOps and integration teams.

3. Integration with Enterprise Systems

Most organizations operate in a complex IT landscape with multiple tools, including:

- Customer Relationship Management (CRM) (e.g., Salesforce, HubSpot)

- Enterprise Resource Planning (ERP) (e.g., SAP, Oracle)

- IT Service Management (ITSM) (e.g., ServiceNow, Zendesk)

- Collaboration & Communication Tools (e.g., Slack, Microsoft Teams)

A. Pre-Built Enterprise Connectors

- Third-Party Advantage: Leading KMPs provide out-of-the-box connectors to seamlessly integrate knowledge into enterprise workflows.

- In-House Challenge: Developing custom integrations with existing enterprise platforms means building, testing, and maintaining each connector as those platforms' APIs change, which requires significant time and resources.
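Conceptually, each connector pulls raw records from one system and maps them into a common schema the KMP can index. The sketch below shows only that shape. The `Connector` protocol, `KnowledgeRecord` fields, and the two fake connectors (which return canned data instead of calling any real CRM, ITSM, or chat API) are hypothetical; real connectors also handle authentication, pagination, rate limits, incremental sync, and permissions.

```python
from dataclasses import dataclass
from typing import Iterable, List, Protocol

@dataclass
class KnowledgeRecord:
    """Hypothetical common schema that every source is mapped into."""
    source: str
    record_id: str
    title: str
    body: str

class Connector(Protocol):
    """Hypothetical interface each source connector implements."""
    name: str
    def fetch(self) -> Iterable[dict]: ...
    def to_record(self, raw: dict) -> KnowledgeRecord: ...

class FakeTicketConnector:
    """Stand-in for an ITSM connector; returns canned data, calls no real API."""
    name = "itsm"
    def fetch(self):
        return [{"id": "INC001", "summary": "VPN down", "resolution": "Restart the VPN client"}]
    def to_record(self, raw):
        return KnowledgeRecord(self.name, raw["id"], raw["summary"], raw["resolution"])

class FakeChatConnector:
    """Stand-in for a chat-tool connector; field names are invented."""
    name = "chat"
    def fetch(self):
        return [{"id": "msg-42", "text": "Clear the DNS cache to fix intranet errors"}]
    def to_record(self, raw):
        return KnowledgeRecord(self.name, raw["id"], raw["text"], raw["text"])

def ingest(connectors: Iterable[Connector]) -> List[KnowledgeRecord]:
    """Normalize records from every connector into one list for indexing."""
    return [c.to_record(raw) for c in connectors for raw in c.fetch()]
```

Multiply this by every system in the list above, each with its own API versions and data model, to get a sense of the integration backlog an in-house team would carry.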

B. AI-Enhanced Workflow Automation

- Third-Party Advantage: Vendor solutions automate knowledge workflows (e.g., auto-suggesting knowledge articles for customer service agents).

- In-House Challenge: Manually building AI-driven decision trees and workflow automations is complex and requires constant optimization.

C. Highly Available (HA) Architecture

- Third-Party Advantage: Leading KMPs run multiple instances of their platforms across hyperscalers using provider-grade HA architectures, leveraging economies of scale.

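- In-House Challenge: Replicating provider-grade HA in-house means designing and operating redundant infrastructure yourself. With a third-party KMP, customers can instead focus on resilient connectivity to the platform using Tier 1 ISPs, leaving uptime and scalability of the platform itself to the KMP supplier.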
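Why connectivity design matters can be shown with standard availability arithmetic: components in series multiply their availabilities, while redundant paths in parallel fail only if all of them fail. The sketch below assumes independent failures, and the example figures (99.9% per ISP circuit, 99.99% for the platform) are hypothetical, not quoted SLAs.

```python
from math import prod

def series(*avail):
    """Every component must be up, so availabilities multiply."""
    return prod(avail)

def parallel(*avail):
    """Up if at least one redundant path is up (assumes independent failures)."""
    return 1 - prod(1 - a for a in avail)

# Hypothetical figures: one or two 99.9% ISP circuits in front of a 99.99% platform.
single_path = series(0.999, 0.9999)
dual_path = series(parallel(0.999, 0.999), 0.9999)
```

With one circuit, end-to-end availability is 0.999 × 0.9999 ≈ 99.89%, dominated by the access link. With two diverse circuits, the access layer reaches 1 − 0.001² = 99.9999%, and end-to-end availability rises to about 99.99%, close to the platform's own figure.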

4. Security, Compliance, and Governance

For industries like finance, healthcare, and government, compliance with regulatory frameworks such as GDPR, HIPAA, and ISO 27001 is non-negotiable.

A. Enterprise-Grade Security & Compliance in the AI Era

- Third-Party Advantage: Top vendors provide:

  - Role-based access controls (RBAC)

  - Data encryption (at rest and in transit)

  - Audit logs and compliance tracking

- In-House Challenge: Custom-built systems must implement secure authentication (OAuth, SSO, multi-factor authentication), data governance policies, and compliance tracking, requiring dedicated security teams.
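At its core, role-based access control maps roles to permissions and checks a user's roles before each action, recording the decision for audit. The sketch below is a minimal illustration; the role names, permission strings, and in-memory `audit_log` are hypothetical, and a real deployment would source roles from the identity provider (SSO) and write audit events to tamper-evident storage.

```python
# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "viewer": {"article:read"},
    "editor": {"article:read", "article:write"},
    "admin": {"article:read", "article:write", "article:delete", "audit:read"},
}

# In-memory audit trail of (user, permission, decision) tuples.
audit_log = []

def is_allowed(user_roles, permission):
    """True if any of the user's roles grants the permission; unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)

def authorize(user, user_roles, permission):
    """Check a permission and record the decision for compliance tracking."""
    allowed = is_allowed(user_roles, permission)
    audit_log.append((user, permission, "allow" if allowed else "deny"))
    return allowed
```

Note the default-deny behavior: an unrecognized role grants nothing, which is the safer failure mode for a knowledge base holding regulated content.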

B. Disaster Recovery & High Availability

- Third-Party Advantage: Vendors offer 99.99% uptime SLAs, built-in redundancy, and real-time failover mechanisms.

- In-House Challenge: Enterprises must maintain replication strategies, failover environments, and active monitoring, adding significant operational overhead.
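To put the 99.99% uptime figure in perspective, an SLA can be converted into a downtime budget. A 99.99% SLA over a 365-day year allows about 52.6 minutes of downtime (about 4.3 minutes in a 30-day month), while 99.9% allows about 8.8 hours a year. The helper below is a simple sketch of that arithmetic; real SLAs define their own measurement windows and exclusions, such as scheduled maintenance.

```python
def downtime_budget_minutes(sla_percent, period_days=365.0):
    """Maximum downtime, in minutes, that an uptime SLA permits over `period_days`."""
    return (1 - sla_percent / 100) * period_days * 24 * 60

yearly_at_four_nines = downtime_budget_minutes(99.99)         # about 52.56 minutes
monthly_at_four_nines = downtime_budget_minutes(99.99, 30.0)  # about 4.32 minutes
yearly_at_three_nines = downtime_budget_minutes(99.9)         # about 525.6 minutes
```

An in-house team committing to the same target has well under an hour a year for every outage, patch window, and failover test combined.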

5. Long-Term Cost Considerations

While building in-house may seem cost-effective initially, long-term costs escalate due to:

A. Development & Engineering Costs

- Homegrown systems require hiring data scientists, NLP engineers, AI model trainers, DevOps specialists, and compliance experts.

- Vendor solutions shift ongoing platform development and maintenance to the provider, leaving internal teams to focus on configuration and integration.

B. Maintenance & Support Overhead

- Building a KMP is not a one-time project—it requires constant updates, security patches, and AI model retraining.

- Third-party solutions handle upgrades, bug fixes, and enhancements with minimal demand on internal resources.

C. Total Cost of Ownership (TCO)

- Hidden costs of homegrown solutions include server costs, API management, monitoring tools, and security infrastructure.

- Third-party solutions offer predictable pricing models (SaaS subscriptions, enterprise licensing).
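A simple way to frame the TCO comparison is cumulative cost over a fixed horizon: upfront spend plus recurring spend that may grow each year. The sketch below does only that arithmetic. Every number in `BUILD` and `BUY` is a hypothetical placeholder, not a benchmark or vendor price; substitute your own staffing, infrastructure, and subscription estimates.

```python
def cumulative_cost(upfront, annual, years, annual_growth=0.0):
    """Upfront cost plus recurring cost over `years`, growing by `annual_growth` each year."""
    return upfront + sum(annual * (1 + annual_growth) ** y for y in range(years))

def compare_tco(build, buy, years):
    """Return (build_total, buy_total) over the same horizon."""
    return (cumulative_cost(years=years, **build), cumulative_cost(years=years, **buy))

# Hypothetical placeholder inputs. "Build" bundles staff, servers, API management,
# monitoring, and security tooling; "buy" bundles onboarding and subscription fees.
BUILD = {"upfront": 400_000, "annual": 350_000, "annual_growth": 0.05}
BUY = {"upfront": 50_000, "annual": 250_000, "annual_growth": 0.03}

if __name__ == "__main__":
    build_total, buy_total = compare_tco(BUILD, BUY, years=5)
    print(f"5-year build: {build_total:,.0f}   5-year buy: {buy_total:,.0f}")
```

The growth parameter matters: in-house costs tend to compound as the platform accumulates features, integrations, and models to retrain, which is the escalation described in this section.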

Final Verdict: Why Enterprises Should Choose a Third-Party KMP

The Bottom Line

For enterprises looking to deploy an AI-driven Knowledge Management Platform, a third-party solution offers faster implementation, enterprise-grade security, lower maintenance overhead, and superior AI capabilities.

While in-house development may seem appealing, the time, expertise, and resources required to build and sustain a competitive platform far outweigh the cost of leveraging an established vendor solution.

For CIOs and decision-makers leading their enterprise AI Center of Excellence, the strategic choice is clear: partner with a specialized KMP provider to maximize AI-driven knowledge efficiency while focusing internal resources on core business priorities. The team at Macronet Services can offer critical guidance on your journey – please reach out for a conversation about how we can help!
