Cato Networks Deep Dive: A Technical SD-WAN & SASE Architecture Guide for Senior Network Engineers

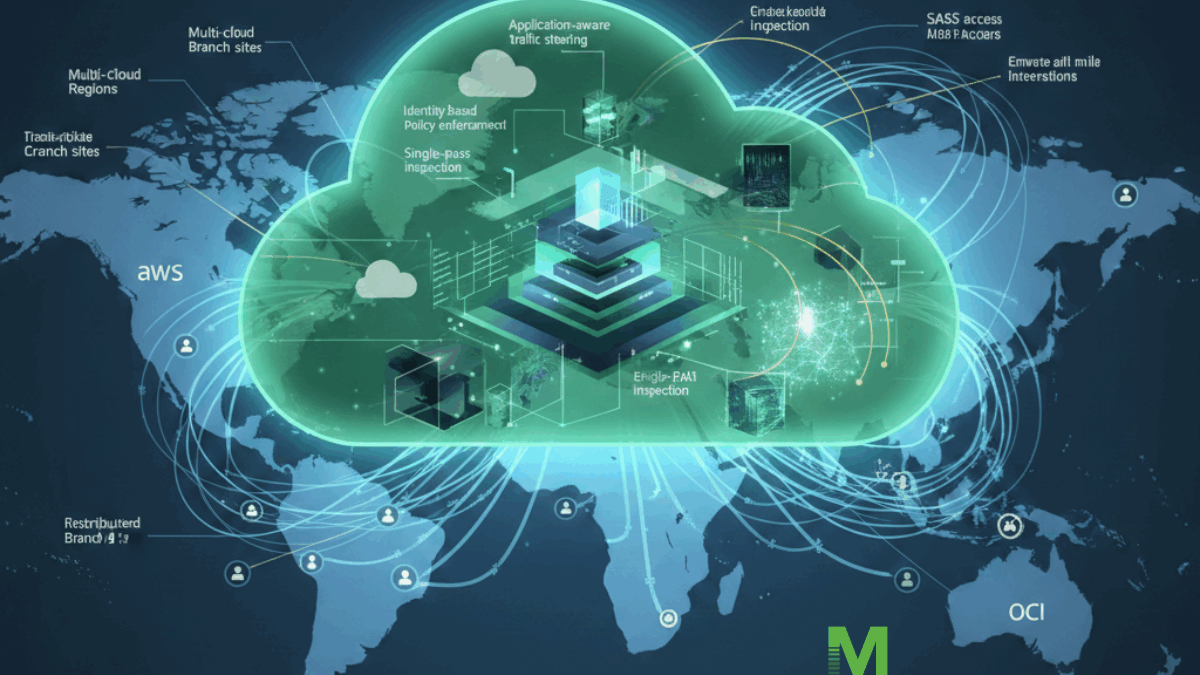

Cato Networks is best understood as a cloud-native WAN + security service delivered from a globally distributed SASE cloud rather than as a traditional “appliance SD-WAN” product with optional security add-ons. In practice, you deploy Cato Sockets (physical or virtual) at edges (branches, DCs, clouds) that establish encrypted tunnels into the Cato Cloud, where traffic is routed across Cato’s backbone and inspected/enforced by a single-pass security engine.

This article is written for engineers evaluating SD-WAN offerings and focuses on how Cato behaves under the hood: dataplane, HA/recovery, routing, traffic steering, security inspection, China connectivity, and cloud on-ramps for multi-cloud.

Table of contents

- Where Cato fits in the SD-WAN/SASE landscape

- Core architecture: Socket → PoP → backbone → security engine

- Underlay, tunnels, and resiliency: DTLS, PoP selection, failover, SLAs

- SD-WAN functions: steering, QoS, packet-loss mitigation, acceleration

- Routing & segmentation: IPsec sites, dynamic routing (BGP), policy model

- Security stack (SSE 360): SWG, FWaaS, IPS/NGAM, ZTNA, CASB/DLP, RBI, DNS

- The Single-Pass Cloud Engine (SPACE): why it matters operationally

- Management, observability, APIs, Terraform, SIEM/SOAR integrations

- Multi-cloud connectivity: Cloud Interconnect (AWS/Azure/GCP/OCI) + vSockets + IPsec

- China connectivity: practical architecture patterns and compliance realities

- A technical evaluation checklist for SD-WAN bake-offs

1) Where Cato fits in the SD-WAN/SASE landscape

When engineers say “we’re evaluating SD-WAN,” they’re often comparing architectural philosophies, not just features. Cato’s model is distinct enough that you should frame your evaluation around where the dataplane and security enforcement actually live.

1.1 Three common SD-WAN archetypes (and where Cato lands)

- A) Appliance-centric SD-WAN (overlay-first, security optional)

  - Branch appliances build tunnels to other branches or regional hubs.

  - Security is either local (NGFW on the box) or bolted on via service-chaining to a cloud security provider.

  - Upside: predictable local breakout, deep per-site controls.

  - Tradeoff: multi-vendor stitching, policy fragmentation, and “security path” complexity.

- B) SD-WAN + SSE (two-tier, integrated in the UI but not necessarily in the dataplane)

  - An SD-WAN overlay exists, but Internet/SaaS security typically happens by steering traffic to an SSE provider.

  - This still leaves you with two policy planes and often two sets of logs/objects.

- C) Cloud-native SASE (network + security delivered from a provider cloud): Cato’s approach

  - The provider PoP is both the network hop and the security enforcement point.

  - The branch device is mainly a tunnel endpoint + local forwarding/HA element, not “the place where most security happens.”

  - WAN optimization and security inspection are designed to occur in the same place (the PoP), using one inspection pipeline.

That last point matters: if your organization expects “SD-WAN box does the routing and security locally, cloud is optional,” then Cato will feel different. Conversely, if your strategic goal is “reduce boxes and consolidate network+security operations,” Cato maps cleanly.

1.2 The key differentiator: the PoP is your transit hub (not your data center)

In many legacy WANs, the “center” is:

- MPLS core,

- regional DC,

- or your own hub-and-spoke termination (DMVPN, SD-WAN hubs, etc.)

In Cato, the PoP is the hub:

- Branch/user/cloud edges establish tunnels to the closest/optimal PoP.

- From there, traffic either:

  - traverses the provider backbone to another PoP (for site-to-site / cloud-to-site), or

  - exits to the Internet/SaaS from a PoP egress region.

This is why Cato is often evaluated as “SD-WAN + private backbone + SSE in one service” rather than a pure edge SD-WAN.

Practical implication: your app performance and security posture depend heavily on:

- PoP density near your sites/users,

- where egress occurs (geo),

- and how quickly the platform reacts to last-mile degradation.
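PoP proximity puts a hard physical floor under latency before any optimization applies. A minimal sketch of that floor follows, assuming light in fiber covers about 200 km per millisecond (roughly 5 µs per km one way) and an assumed route factor of 1.5 for how much longer real fiber paths run than the great-circle distance. `haversine_km` and `min_rtt_ms` are illustrative helpers, not Cato tooling.

```python
import math

# Light in silica fiber travels at roughly 2/3 of c: ~200 km per millisecond,
# i.e. about 5 microseconds of one-way delay per kilometre.
FIBER_KM_PER_MS = 200.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km: float, route_factor: float = 1.5) -> float:
    """Propagation-only RTT floor (no queuing, serialization, or processing).

    route_factor is an ASSUMED ratio of real fiber path length to great-circle
    distance; replace it with measurements from your own circuits.
    """
    one_way_ms = distance_km * route_factor / FIBER_KM_PER_MS
    return 2 * one_way_ms


# Hypothetical branch about 1,000 km (great-circle) from its nearest PoP.
print(round(min_rtt_ms(1000), 1))  # 15.0
```

If a site's measured RTT to its assigned PoP sits far above this floor, the gap comes from queuing, the last mile, or routing, and it is worth investigating during the bake-off.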

1.3 What “converged” means operationally

A lot of vendors say “integrated.” The engineer question is: integrated where, and to what depth?

With a converged SASE model like Cato’s, you’re aiming for:

- one identity/app classification pipeline

- one security inspection pipeline

- one policy object model

- one logging/export model

Cato’s single-pass engine (SPACE) is explicitly positioned as the mechanism that lets multiple controls (SWG, IPS, DLP, ZTNA, etc.) be applied coherently without repeatedly decrypting/decoding/reclassifying flows.

Why this matters in real life:

- fewer policy collisions (“SWG allowed it but FW blocked it”),

- fewer visibility gaps between features,

- less service-chaining hairpin latency,

- and simpler incident response because events share consistent metadata.

1.4 Where Cato overlaps SD-WAN vs where it’s more than SD-WAN

If your scorecard is still SD-WAN-only, you’ll cover:

- link aggregation / dual uplink resiliency

- app-aware steering

- QoS and prioritization

- overlay encryption and segmentation

- dynamic routing integration (BGP) for DC/cloud

Cato can check those boxes—but it’s usually chosen because the security and remote access are not add-ons:

- remote users get the same policy model as sites (vs separate VPN + separate security stack),

- Internet/SaaS traffic can be inspected at the PoP with consistent controls,

- and you can treat branches, cloud VPCs/VNETs, and users as part of one policy domain.

So in an eval, don’t just ask “is the SD-WAN good?” Ask:

- “Does it reduce the number of architectural components we run?”

- “Does it reduce the number of policy planes we maintain?”

- “Does it improve day-2 operations (logging, incident response, automation)?”

1.5 The mental model to use during your bake-off

Think in terms of “traffic domains” and “enforcement points”:

- Branch ↔ Branch / Branch ↔ DC / Branch ↔ Cloud

  - How deterministic is the path across the provider backbone?

  - How does failover behave during brownouts?

- Branch ↔ SaaS / Internet

  - Where does egress happen (geo)?

  - Can you enforce consistent web controls without tromboning to HQ?

- User ↔ SaaS / Private apps

  - Is the remote-user experience “bolted on VPN,” or truly the same policy framework?

- Cloud ↔ Cloud / Cloud ↔ Branch

  - Are cloud on-ramps first-class (interconnects/vSockets), or just IPsec tunnels?

Cato’s promise is that these domains are handled coherently by the same PoP cloud and policy engine.

2) Core architecture: Socket → Cato PoP → global private backbone → destination

2.1 Cato Sockets (physical + virtual)

Cato’s edge device is the Socket. Sockets establish DTLS-based tunnels into the Cato Cloud, after which Cato can create a logical mesh and enforce inter-site policy centrally (including WAN firewall policy governing what can talk to what).

Common deployment types you’ll see in real networks:

- Branch Sockets (physical) with dual uplinks (broadband + DIA, DIA + LTE/5G, etc.)

- HA pairs at key sites

- vSockets for cloud footprints (AWS/Azure/GCP), plus specialized cloud on-ramp patterns (below)

- IPsec sites when you can’t or won’t deploy a Socket (e.g., you keep an existing firewall termination)

2.2 PoPs and the Cato backbone

Once traffic hits a PoP, the forwarding decision is no longer “which underlay next-hop do I choose?” but “do I carry this across Cato’s backbone to another PoP, or egress it to the public Internet?”

This is a design point engineers should test:

- latency/jitter stability across the Cato backbone vs your existing MPLS or DIA paths,

- regional PoP proximity for remote edges,

- and where inspection occurs relative to app destinations.

Cato’s documentation and positioning emphasize a global cloud service applying network optimizations and security consistently from any edge type (sites, cloud, mobile users).

3) Underlay, tunnels, and resiliency: DTLS, PoP selection, Smart SLA, and recovery behavior

3.1 DTLS tunnels and PoP selection

Sockets connect to the Cato Cloud using DTLS tunnels.

Operationally, what you care about is: how fast does an edge recognize last-mile degradation and move to a better PoP/path?

Cato exposes mechanisms like Smart SLA where the system evaluates last-mile SLA metrics and can move tunnels to a different PoP when requirements aren’t met (based on an evaluation window).
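The evaluation-window idea can be illustrated with a small sliding-window check. This is a hypothetical model, not Cato's Smart SLA algorithm: the thresholds, `window_size`, and `violation_ratio` are assumptions chosen to show why a window keeps a single bad interval from triggering a PoP move.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SlaThresholds:
    # HYPOTHETICAL thresholds; set these from your own application SLAs.
    max_latency_ms: float = 150.0
    max_jitter_ms: float = 30.0
    max_loss_pct: float = 1.0


@dataclass
class IntervalStats:
    """Aggregated last-mile measurements for one probe interval."""
    latency_ms: float
    jitter_ms: float
    loss_pct: float


class SlaWindow:
    """Sliding window that recommends a PoP move only when violations persist."""

    def __init__(self, thresholds: SlaThresholds, window_size: int = 10,
                 violation_ratio: float = 0.5):
        self.thresholds = thresholds
        self.samples: deque = deque(maxlen=window_size)
        self.violation_ratio = violation_ratio

    def _violates(self, s: IntervalStats) -> bool:
        t = self.thresholds
        return (s.latency_ms > t.max_latency_ms
                or s.jitter_ms > t.max_jitter_ms
                or s.loss_pct > t.max_loss_pct)

    def add(self, sample: IntervalStats) -> None:
        self.samples.append(sample)  # the oldest interval drops off automatically

    def should_move_pop(self) -> bool:
        # Require a full window so one bad interval cannot trigger a move.
        if len(self.samples) < self.samples.maxlen:
            return False
        bad = sum(1 for s in self.samples if self._violates(s))
        return bad / len(self.samples) >= self.violation_ratio


window = SlaWindow(SlaThresholds(), window_size=4)
for loss in (0.0, 2.0, 3.0, 0.5):  # two of four intervals exceed 1% loss
    window.add(IntervalStats(latency_ms=40, jitter_ms=5, loss_pct=loss))
print(window.should_move_pop())  # True
```

In a bake-off, the interesting measurement is the platform's equivalent of `window_size`: how long a brownout must last before tunnels actually move.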

3.2 “Last mile” visibility and managed monitoring (ILMM)

Cato also offers Intelligent Last-Mile Management (ILMM) as an operational layer, including NOC involvement and tooling to detect and resolve ISP last-mile issues.

From an engineering standpoint, this is less about the brochure and more about:

- what telemetry you get (loss, latency, jitter per link/tunnel),

- how it correlates to app experience,

- and whether escalation workflows reduce your mean-time-to-innocence with ISPs.

Cato documentation also describes Last Mile Monitoring probes that send ICMP to targets you define to monitor link quality and reachability.
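If you also collect ICMP probe results yourself, for example to cross-check the platform's last-mile dashboards, a small summarizer keeps the comparison consistent. The function below is a hypothetical helper. It treats `None` as an unanswered probe, reports jitter as the mean absolute difference between consecutive RTTs (a simple metric, not the RFC 3550 estimator), and uses a nearest-rank 95th percentile.

```python
import math
import statistics
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize probe results in send order; None marks an unanswered probe."""
    sent = len(rtts_ms)
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (sent - len(replies)) / sent if sent else 0.0
    if not replies:
        return {"loss_pct": loss_pct, "avg_ms": None, "jitter_ms": None, "p95_ms": None}

    # Jitter as mean absolute difference between consecutive replies
    # (simple variation metric, not the RFC 3550 smoothed estimator).
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter = statistics.mean(diffs) if diffs else 0.0

    # Nearest-rank 95th percentile of answered probes.
    ordered = sorted(replies)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "loss_pct": loss_pct,
        "avg_ms": statistics.mean(replies),
        "jitter_ms": jitter,
        "p95_ms": ordered[rank - 1],
    }


print(summarize_probes([10.0, 12.0, None, 14.0, 10.0]))
```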

3.3 Recovery models differ by site type

Cato explicitly distinguishes recovery behavior for Socket/vSocket vs other site types, noting Socket/vSocket as the most resilient model for WAN continuity and predictable recovery at critical sites.

This is a good cue for eval: if you plan to deploy IPsec-only at key sites, validate what you give up (failure modes, convergence time, feature parity).

4) SD-WAN functions: steering, QoS, packet-loss mitigation, and acceleration

Cato’s SD-WAN messaging focuses on:

- application prioritization,

- link resiliency,

- packet loss mitigation,

- and TCP acceleration.

4.1 Policy-based steering (Network Rules)

Cato implements traffic steering via Network Rules (policy-based routing + prioritization). These rules can steer traffic by application/source/destination and “optimize for latency/packet loss” while selecting transport paths.
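Ordered, first-match policy-based routing is the general pattern behind rules like these. A minimal sketch follows, using hypothetical fields (`app`, `src_site`, `dst`, `transport`, `priority`). Take Cato's actual Network Rule schema and evaluation details from its documentation, not from this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Flow:
    app: str
    src_site: str
    dst: str


@dataclass
class NetworkRule:
    """Hypothetical rule shape; None in a match field acts as a wildcard."""
    name: str
    app: Optional[str] = None
    src_site: Optional[str] = None
    dst: Optional[str] = None
    transport: str = "backbone"   # hypothetical values: "backbone" or "internet"
    priority: int = 255           # assumption: lower number = higher priority

    def matches(self, flow: Flow) -> bool:
        pairs = ((self.app, flow.app), (self.src_site, flow.src_site), (self.dst, flow.dst))
        return all(want is None or want == have for want, have in pairs)


def steer(flow: Flow, rules: Sequence[NetworkRule], default: NetworkRule) -> NetworkRule:
    """Ordered, first-match evaluation; falls through to the default rule."""
    for rule in rules:
        if rule.matches(flow):
            return rule
    return default


rules = [
    NetworkRule("voice-priority", app="voip", priority=10),
    NetworkRule("o365-internet", app="office365", transport="internet", priority=50),
]
default_rule = NetworkRule("default")

for flow in (Flow("voip", "nyc", "lon"), Flow("office365", "nyc", "saas"), Flow("ssh", "nyc", "lon")):
    rule = steer(flow, rules, default_rule)
    print(flow.app, "->", rule.name, rule.transport, rule.priority)
```

A useful bake-off exercise is exactly this: list representative flows, predict which rule each should hit (including the fall-through to the default), and confirm the platform agrees.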

What to test in a bake-off:

- Can you create deterministic steering for a given app (e.g., VoIP, VDI, SaaS)?

- Are rules evaluated with sufficient “app ID” fidelity (DPI classification accuracy)?

- Can you express “use backbone vs Internet” decisions cleanly?

- How does policy interact with segmentation and security policy?

4.2 Packet loss mitigation (duplication technique)

Cato documents Packet Loss Mitigation that uses packet duplication (on multi-tunnel links) to mitigate last-mile packet loss, specifically for Cato Cloud transport links.
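The arithmetic behind duplication is simple if you assume the two tunnels lose packets independently. A packet is lost only when both copies are lost, so the effective loss rate is p_a × p_b, paid for with roughly double the bandwidth for the duplicated traffic. The sketch pairs that calculation with a hypothetical receiver-side deduplicator keyed on sequence numbers. It illustrates the technique, not Cato's implementation. Correlated loss, such as both tunnels riding one congested last mile, makes the independence assumption optimistic.

```python
def effective_loss(p_a: float, p_b: float) -> float:
    """Loss rate seen by the application when every packet is sent on two tunnels.

    ASSUMES independent loss on the two tunnels; correlated loss makes this optimistic.
    """
    return p_a * p_b


class Deduplicator:
    """Receiver side: deliver the first copy of each sequence number, drop later copies."""

    def __init__(self, window: int = 1024):
        self.window = window        # how far back duplicates are still recognized
        self.seen: set = set()
        self.highest = -1

    def accept(self, seq: int) -> bool:
        if seq <= self.highest - self.window or seq in self.seen:
            return False            # duplicate, or too old to track reliably
        self.seen.add(seq)
        self.highest = max(self.highest, seq)
        if len(self.seen) > 2 * self.window:
            # Periodically forget sequence numbers that fell out of the window.
            floor = self.highest - self.window
            self.seen = {s for s in self.seen if s > floor}
        return True


# 2% and 3% loss per tunnel -> about 0.06% after duplication (for ~2x bandwidth).
print(effective_loss(0.02, 0.03))
dedup = Deduplicator()
print([dedup.accept(s) for s in (1, 1, 2, 3, 2)])  # [True, False, True, True, False]
```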

Engineers should validate:

- which traffic classes get duplication (all? only selected apps? only above thresholds?),

- bandwidth overhead tradeoffs,

- behavior under asymmetric loss,

- and whether the mitigation helps prevent path flapping or contributes to it.

4.3 TCP acceleration and application experience

Cato highlights TCP acceleration as part of the SD-WAN value proposition.

You’ll want to measure:

- long-fat-pipe throughput for inter-region flows,

- performance with moderate loss/jitter on one link,

- and the behavior of latency-sensitive flows during brownouts.
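Two back-of-the-envelope formulas explain why long-haul flows are where TCP acceleration matters most. The bandwidth-delay product says how much data must be in flight to fill a link. The Mathis et al. approximation, rate ≤ (MSS/RTT) × (√(3/2)/√p), bounds steady-state Reno-style TCP throughput under loss rate p. Both are textbook models, not measurements of any Cato feature, and modern congestion control such as CUBIC or BBR will deviate from Mathis.

```python
import math


def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return bandwidth_bps * rtt_s / 8


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Mathis et al. bound for steady-state Reno-style TCP: (MSS/RTT) * sqrt(3/2)/sqrt(p).

    A textbook model, not a prediction for CUBIC or BBR; result in bits per second.
    """
    c = math.sqrt(1.5)
    return 8 * (mss_bytes / rtt_s) * (c / math.sqrt(loss_rate))


# Hypothetical inter-region path: 100 Mbit/s, 200 ms RTT.
print(bdp_bytes(100e6, 0.2) / 1e6)                             # 2.5 (MB in flight)
# Same RTT with 1% loss and a 1460-byte MSS: one Reno-style flow tops out near 0.72 Mbit/s.
print(round(mathis_throughput_bps(1460, 0.2, 0.01) / 1e6, 2))  # 0.72
```

Numbers like these make a reasonable baseline to beat when you measure long-fat-pipe throughput with and without the platform in the path.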

5) Routing & segmentation: BGP, IPsec sites, and “identity-aware” policy concepts

5.1 IPsec sites for clouds or third-party firewall edges

Cato supports IPsec tunnels (IKEv1/IKEv2, recommends IKEv2) and calls out typical IPsec-site use cases such as public cloud sites and offices that use a third-party firewall.

5.2 Dynamic routing (BGP)

Cato supports BGP neighbors for IPsec connections, and its docs walk through how to define private IPs inside the tunnel and configure neighbor pairs.
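Planning the private IPs inside each tunnel is easy to get wrong across dozens of IPsec sites. The helper below is a hypothetical planning aid built on Python's standard `ipaddress` module. It carves /30 (or /31) point-to-point subnets from a reserved pool and assigns one address to each BGP neighbor. The pool, the prefix length, and which end is labeled local are assumptions to align with Cato's own IPsec/BGP guidance.

```python
import ipaddress


def allocate_peering_links(pool_cidr: str, count: int, prefixlen: int = 30):
    """Carve point-to-point subnets for BGP neighbors inside IPsec tunnels.

    Returns a list of (subnet, local_ip, peer_ip). Treating the first usable
    address as the Cato side and the second as your device is an arbitrary
    convention in this sketch.
    """
    if prefixlen not in (30, 31):
        raise ValueError("use /30 or /31 for point-to-point peering links")
    pool = ipaddress.ip_network(pool_cidr)
    links = []
    for subnet in pool.subnets(new_prefix=prefixlen):
        if len(links) == count:
            break
        if prefixlen == 31:
            local_ip, peer_ip = subnet[0], subnet[1]       # RFC 3021: both addresses usable
        else:
            local_ip, peer_ip = list(subnet.hosts())[:2]   # skip network/broadcast
        links.append((subnet, local_ip, peer_ip))
    if len(links) < count:
        raise ValueError(f"{pool_cidr} cannot hold {count} /{prefixlen} links")
    return links


# Hypothetical reserved pool for tunnel inside addresses.
for subnet, local_ip, peer_ip in allocate_peering_links("10.255.0.0/29", 2):
    print(subnet, local_ip, peer_ip)
# 10.255.0.0/30 10.255.0.1 10.255.0.2
# 10.255.0.4/30 10.255.0.5 10.255.0.6
```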

There’s also guidance on preparing to implement BGP with Cato and a note that available BGP features differ for Socket sites vs IPsec sites—important if your architecture mixes both.

5.3 Segmentation & policy model

Cato frames segmentation as part of the SPACE engine capabilities (flow control/segmentation via NGFW).

In practice, your segmentation questions are:

- Is the segmentation object model site-based, user-based, app-based, or all of the above?

- How do you express “east-west” constraints between branches and cloud VPCs/VNETs?

- Where is the enforcement point (PoP vs edge) for inter-site flows?
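The east-west questions above become concrete once you write a few rules down. A minimal sketch follows, using a hypothetical object model: sites mapped to groups, ordered rules, and a configurable default action. It evaluates site-to-site flows first-match. It is meant for reasoning about policy intent during an evaluation, not as a description of how Cato's WAN firewall stores or orders rules.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical object model: every site belongs to one group; rules reference groups.
SITE_GROUPS = {
    "branch-nyc": "branches",
    "branch-lon": "branches",
    "aws-prod-vpc": "cloud-prod",
    "azure-dev-vnet": "cloud-dev",
}


@dataclass(frozen=True)
class WanRule:
    src_group: str  # "*" matches any group
    dst_group: str
    service: str    # e.g. "https", "ssh", or "*"
    action: str     # "allow" or "block"


def evaluate(src_site: str, dst_site: str, service: str,
             rules: Sequence[WanRule], default_action: str = "block") -> str:
    """First-match evaluation of a site-to-site (east-west) flow."""
    src, dst = SITE_GROUPS.get(src_site), SITE_GROUPS.get(dst_site)
    for rule in rules:
        if (rule.src_group in ("*", src) and rule.dst_group in ("*", dst)
                and rule.service in ("*", service)):
            return rule.action
    return default_action  # assumption: implicit deny; confirm the platform's real default


rules = [
    WanRule("branches", "cloud-prod", "https", "allow"),
    WanRule("*", "cloud-prod", "*", "block"),
    WanRule("branches", "branches", "*", "allow"),
]
print(evaluate("branch-nyc", "aws-prod-vpc", "https", rules))  # allow
print(evaluate("branch-nyc", "aws-prod-vpc", "ssh", rules))    # block
print(evaluate("branch-nyc", "branch-lon", "smb", rules))      # allow
print(evaluate("azure-dev-vnet", "branch-lon", "ssh", rules))  # block (default)
```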

6) Security stack (SSE 360): converged controls, evolving inspection domains, and why SASE becomes the enforcement plane

Cato’s SSE 360 should be understood less as a checklist of security features and more as a policy and inspection framework that is designed to expand as new traffic classes emerge. Today, that framework covers the familiar domains—web, SaaS, private applications, and east–west WAN traffic—but Cato’s roadmap and recent acquisition activity make it clear that the company views AI interactions as the next major domain to be governed by the same SASE control plane.

At a functional level, SSE 360 already converges SWG, FWaaS/NGFW, IPS, NGAM, DNS security, CASB, DLP, RBI, and ZTNA into a single cloud-delivered service. The operational benefit for engineers is not simply fewer vendors, but fewer policy seams: one identity model, one application classification pipeline, and one enforcement context regardless of whether traffic originates from a branch, a cloud workload, or a remote user.

This convergence becomes more important as traffic patterns grow less deterministic. Modern enterprise traffic is no longer just “user to SaaS” or “branch to DC.” It increasingly includes API-driven services, background SaaS integrations, and now AI-driven workflows where data is embedded in prompts, tool calls, and generated outputs. Traditional security controls struggle here because they were designed to inspect files, sessions, or well-defined SaaS transactions—not conversational, semi-structured data flows.

Cato’s position is that SASE is the only realistic place to enforce policy at scale for these emerging patterns, because it already sits at the egress and interconnect points where this traffic converges. That perspective directly sets up the architectural role of the Single-Pass Cloud Engine.

7) The Single-Pass Cloud Engine (SPACE): unified inspection today, extensible enforcement tomorrow (including AI)

Cato’s Single-Pass Cloud Engine (SPACE) is the technical mechanism that enables its “converged” claim to hold up under scrutiny. Rather than chaining multiple virtual security appliances or services together, SPACE performs classification, decryption, inspection, and enforcement once, then applies multiple security decisions to the same flow context.

From a senior engineer’s perspective, this matters less for raw throughput and more for consistency and extensibility. Because SPACE builds a shared metadata model for each flow—identity, application, risk posture, content type—it becomes possible to layer additional controls without redesigning the datapath. This is why Cato can plausibly integrate new inspection domains (such as AI security) without introducing parallel policy engines or logging silos.
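The single-pass idea can be expressed as a data-structure choice: build the flow context once, then let every control read it. The sketch below is a deliberately simplified, hypothetical model. The `FlowContext` fields, the control functions, and the block-wins decision are all assumptions, and it does not describe SPACE internals. It does show why adding a control can be a new entry in a table rather than a new datapath.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class FlowContext:
    """Metadata computed once per flow and shared by every control (hypothetical fields)."""
    user: str
    app: str
    category: str
    content_type: str = ""
    risk: int = 0                                   # hypothetical 0-100 score
    verdicts: Dict[str, str] = field(default_factory=dict)


def classify(raw: dict) -> FlowContext:
    """Stand-in for the one decrypt/parse/classify step."""
    return FlowContext(user=raw["user"], app=raw["app"], category=raw["category"],
                       content_type=raw.get("content_type", ""), risk=raw.get("risk", 0))


# Each control reads the shared context; none re-parses the flow.
def swg(ctx: FlowContext) -> str:
    return "block" if ctx.category == "gambling" else "allow"


def dlp(ctx: FlowContext) -> str:
    return "block" if ctx.content_type == "pci-data" and ctx.app != "payments-saas" else "allow"


def ips(ctx: FlowContext) -> str:
    return "block" if ctx.risk >= 80 else "allow"


Control = Callable[[FlowContext], str]
CONTROLS: Dict[str, Control] = {"swg": swg, "dlp": dlp, "ips": ips}


def single_pass(raw: dict, controls: Dict[str, Control] = CONTROLS) -> Tuple[str, FlowContext]:
    ctx = classify(raw)                           # parse/classify exactly once
    for name, control in controls.items():
        ctx.verdicts[name] = control(ctx)         # every verdict lands in one context
    decision = "block" if "block" in ctx.verdicts.values() else "allow"
    return decision, ctx


decision, ctx = single_pass({"user": "alice", "app": "chatgpt", "category": "ai",
                             "content_type": "pci-data"})
print(decision, ctx.verdicts)  # block {'swg': 'allow', 'dlp': 'block', 'ips': 'allow'}
```

Extending it means adding one entry, for example `{**CONTROLS, "ai": ai_policy}` for a hypothetical `ai_policy(ctx)` function. The context-building step does not change, and the new verdict is logged alongside the others.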

This is where the Aim Security acquisition fits naturally.

Aim Security, acquired by Cato in September 2025, focuses on securing AI interactions, AI agents, and AI application runtimes—specifically addressing risks such as sensitive data leakage via prompts, adversarial and indirect prompt injection, policy bypass, and compliance violations tied to AI usage. Cato has been explicit that Aim will remain available as a standalone offering in the near term, with convergence into the Cato platform targeted for early 2026. That timeline is important, but the architectural intent is even more important: AI security is being positioned as another inspection domain inside SPACE, not as an external bolt-on.

This distinction matters operationally. AI traffic often looks indistinguishable from normal HTTPS at the packet level, yet it carries high-value enterprise data in forms that classic DLP and CASB were never designed to interpret. Prompts, model responses, and agent tool calls are neither files nor traditional SaaS objects. Treating them as first-class inspection targets requires an engine that already understands identity, application context, and policy precedence—and can apply those consistently across users, sites, and clouds.

By integrating Aim’s AI-aware inspection logic into SPACE, Cato is signaling that:

- AI interactions will be governed by the same identity- and policy-driven model as web and SaaS traffic.

- AI-related telemetry will align with existing logging and export mechanisms rather than introducing a parallel security dataset.

- Enforcement can occur at the same PoP-based control points where Internet, SaaS, and private-app traffic is already inspected.

For engineers, this has practical implications that go beyond marketing. It suggests a future state where:

- “Sanctioned vs unsanctioned AI tools” becomes a native policy construct alongside sanctioned SaaS.

- Sensitive data controls can be applied not just to uploads/downloads, but to prompt text and generated output.

- AI runtime abuse (for example, prompt injection causing unintended data access) can be detected and correlated with the same user, device, and network context you already use for incident response.

Critically, this also avoids one of the biggest pitfalls of emerging AI security products: fragmentation. Endpoint-only AI controls lack visibility into shared environments. API-only approaches miss real-time enforcement. Application-layer controls require every AI workload to be instrumented. Cato’s strategy is to anchor AI security in the network/security convergence layer, then extend outward where deeper context is required.

From an evaluation standpoint, this means senior engineers should not treat Aim as a standalone checkbox, but as a litmus test for how extensible Cato’s architecture really is. The right questions are not “Does it block ChatGPT?” but:

- How does AI inspection inherit identity, segmentation, and policy precedence from existing SPACE constructs?

- Are AI-related events logged and exported using the same schemas and pipelines as other SSE telemetry?

- Does enabling AI security introduce additional datapaths, latency, or policy ordering complexity—or does it remain a true single-pass extension?

Seen in this light, Sections 6 and 7 describe not just what Cato’s SSE does today, but how the platform is designed to absorb new traffic classes without architectural rewrites. That is ultimately the strongest technical argument Cato makes in the SD-WAN/SASE market—and the Aim acquisition is evidence that the company is betting on that design holding up as enterprise traffic continues to evolve.

8) Management, observability, APIs, Terraform, and SIEM/SOAR

8.1 One console + one API

Cato advertises unified management (“single pane of glass”) and a single API for config/analysis/troubleshooting/integration.

8.2 Infrastructure as Code (Terraform)

Cato provides a Terraform provider to manage resources like Socket sites, IPsec sites, WAN firewall rules, routing policies, and identity integrations.

If you’re a senior engineer trying to standardize deployments, this is a big differentiator vs vendors where IaC is limited or unofficial.

8.3 SIEM and log pipelines

Many SD-WAN evaluations ignore “day 2” logging until late. Don’t.

Cato’s event feeds and API access are commonly used in SIEM integrations; for example, Sumo Logic’s integration workflow references generating API keys and enabling event feeds, and Rapid7’s InsightIDR docs reference Cato API region identifiers and key creation steps.
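Whatever SIEM you use, the ingestion loop usually has the same shape: page through the feed with a cursor, and persist the cursor only after a batch has been shipped. The sketch assumes a cursor-style "marker" and hides the vendor call behind a hypothetical `fetch_page(marker)` wrapper that returns `(events, next_marker, has_more)`. Check the Cato API documentation for the real query, fields, and rate limits.

```python
from typing import Callable, List, Tuple

# Hypothetical signature for a thin wrapper around the vendor's event-feed API.
FetchPage = Callable[[str], Tuple[List[dict], str, bool]]


def poll_event_feed(fetch_page: FetchPage,
                    forward: Callable[[List[dict]], None],
                    load_marker: Callable[[], str],
                    save_marker: Callable[[str], None]) -> int:
    """Drain a cursor-paged event feed into a SIEM; returns the number of events forwarded.

    The marker is saved only after its batch is forwarded, so a crash replays
    the last batch rather than losing it (at-least-once delivery; dedupe downstream).
    """
    marker = load_marker()
    forwarded = 0
    while True:
        events, next_marker, has_more = fetch_page(marker)
        if events:
            forward(events)
            forwarded += len(events)
        save_marker(next_marker)
        marker = next_marker
        if not has_more:
            return forwarded


# In-memory stand-ins for the API, the SIEM, and the marker store.
pages = {"": ([{"id": 1}, {"id": 2}], "m1", True), "m1": ([{"id": 3}], "m2", False)}
state = {"marker": ""}
shipped: List[dict] = []
count = poll_event_feed(lambda m: pages[m], shipped.extend,
                        lambda: state["marker"], lambda m: state.update(marker=m))
print(count, state["marker"])  # 3 m2
```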

9) Multi-cloud connectivity: Cloud Interconnect + vSockets + IPsec patterns

Cato is unusually explicit about multi-cloud connectivity in documentation.

9.1 Cloud Interconnect Sites (AWS, Azure, GCP, OCI)

Cato’s “Cloud Interconnect Sites” documentation describes native connections to AWS, Azure, GCP, and Oracle Cloud (OCI).

They publish individual guides for each cloud:

- AWS

- Azure

- GCP

- OCI

What to validate technically:

- Which PoPs support each interconnect use case (primary/secondary) and what the redundancy model is in each cloud guide (Cato notes you should verify support by PoP, cloud provider, and fabric providers).

- Whether you can standardize a single interconnect pattern across clouds or must vary by cloud.

- BGP behavior, route limits, and failover time when interconnects flap.
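Failover time on an interconnect is bounded first by how quickly a dead BGP session is detected. Per RFC 4271, a session uses the smaller of the two advertised hold times. BFD (RFC 5880) declares failure after a detect multiplier's worth of missed packets at the agreed interval. The helpers below are illustrative. Whether BFD is available on a given Cato connection, and which timers apply, is something to confirm in each cloud guide rather than assume.

```python
def negotiated_hold_s(local_hold_s: int, peer_hold_s: int) -> int:
    """RFC 4271: a BGP session uses the smaller of the two advertised hold times."""
    return min(local_hold_s, peer_hold_s)


def bfd_detection_ms(agreed_interval_ms: int, detect_mult: int) -> int:
    """RFC 5880 (simplified): session declared down after detect_mult intervals with no packets."""
    return agreed_interval_ms * detect_mult


# Hypothetical timers: 180 s configured on one side, 90 s on the other,
# versus BFD at 300 ms x 3.
print(negotiated_hold_s(180, 90))   # 90  -> up to 90 s before BGP notices a silent failure
print(bfd_detection_ms(300, 3))     # 900 -> under a second, if BFD is available
```

Route withdrawal and reconvergence add to these detection bounds, so measure end-to-end failover during the test rather than relying on timer arithmetic alone.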

9.2 vSockets (cloud-native edges)

Cato also supports vSockets in cloud platforms; for example, there’s documentation for deploying a vSocket in GCP via Terraform (useful if you want repeatable cloud edge deployments).

9.3 IPsec for cloud when you keep your own edge/security

If your standard is “we terminate in our firewall/VPN gateway,” Cato still supports IPsec sites (commonly used for public cloud and third-party firewall deployments).

In that model, you should double-check feature parity and whether your security inspection happens in Cato vs locally.

10) China connectivity: realistic architectures, compliance, and performance

China WAN is where marketing claims often break. Cato has published specific guidance and positioning around China connectivity using licensed local partners and in-country PoPs.

10.1 Licensed-partner model

Cato has stated it uses local partners “duly licensed by the Chinese government” to provide connectivity services out of China via authorized providers.

This matters because operating cross-border connectivity in China intersects with licensing and regulatory constraints; many “just use IPsec to Hong Kong” architectures fail at scale or introduce operational/legal risk.

10.2 In-country PoPs and optimized egress

Cato also describes a model with SLA-backed PoPs in Beijing, Shanghai, and Shenzhen, connecting to Cato’s global backbone with optimized egress via Hong Kong.

From a technical standpoint, you should assess:

- where policy enforcement occurs for China-based users (in-country vs offshore),

- what routes are used for in-China SaaS vs out-of-China SaaS,

- how last-mile circuits are procured (domestic circuits vs international),

- and what “breakout” looks like for China-local Internet access.

10.3 Practical evaluation tips for China

For a real-world proof:

- pick 2–3 representative China sites (coastal + inland, different carriers),

- test both directions (China→global apps, global→China apps),

- validate DNS behaviors and SaaS edge selection,

- measure stability over weeks, not hours,

- and confirm the operational responsibility split (your ISP tickets vs Cato/partner involvement).

11) A senior engineer’s evaluation checklist (what to test, not just what to read)

Use this as a structured bake-off plan.

Dataplane & resiliency

- Tunnel establishment type (DTLS) and convergence behavior after underlay loss

- PoP failover triggers and time-to-recover (Smart SLA behavior)

- HA model for key sites and recovery characteristics by site type

SD-WAN behavior

- Rule expressiveness (Network Rules), app classification accuracy, deterministic steering

- Loss mitigation mechanisms and overhead (packet duplication)

- TCP acceleration impact on long-haul and lossy links

Routing & integration

- BGP feature set for Socket vs IPsec, route propagation, failure behavior

- Migration paths: Socket vs IPsec sites, and what you lose with IPsec-only

Security validation

- SSE function coverage and policy order consistency (SWG, IPS, DLP, ZTNA, CASB, RBI, DNS)

- SPACE packet flow and inspection stages (read the packet flow doc during eval)

- Logging completeness and export (SIEM integrations)

Multi-cloud & China

- Cloud Interconnect feasibility per PoP/cloud/fabric and redundancy patterns

- China: partner licensing model, in-country enforcement, and tested performance from real sites

Automation

- Terraform coverage for your intended footprint and lifecycle operations

Closing perspective: when Cato tends to win

Cato tends to win when you want to simplify architecture into a single SASE service that gives you:

- consistent WAN behavior via PoP/backbone,

- consistent cloud security inspection (single pass),

- and consistent management/automation across branch + cloud + remote users.

Conclusion: Independent guidance for real-world SD-WAN decisions

Choosing the right SD-WAN architecture—especially for a global, multi-cloud, security-converged network—is no longer about feature checklists. It’s about understanding how different design philosophies behave under real conditions: last-mile instability, cloud on-ramps, regulatory constraints (including regions like China), security inspection at scale, and day-2 operational complexity.

This is where Macronet Services helps senior network and security teams cut through vendor narratives.

Macronet Services represents most of the leading SD-WAN and SASE suppliers and Tier-1 global ISPs. That combination allows us to evaluate platforms like Cato—and its peers—not in isolation, but as part of an end-to-end global network that includes carriers, cloud providers, security controls, and operational realities.

Because we are vendor-aligned but vendor-independent, our role is not to push a single solution. It’s to help you:

- map business and technical requirements to the right SD-WAN architecture,

- understand tradeoffs between cloud-native SASE models and edge-centric designs,

- validate performance, security, and resiliency assumptions before you commit,

- and design a global WAN that will still make sense as traffic patterns, cloud usage, and AI adoption continue to evolve.

If you’re evaluating SD-WAN options, rethinking your global network strategy, or simply want a technically grounded conversation about what you’re trying to achieve, Macronet Services is always available for an open, engineering-level discussion—no pressure, no sales pitch, just clarity. Please reach out anytime.

Frequently Asked Questions

- What is Cato Networks and how is it different from traditional SD-WAN?

Cato Networks is a cloud-native SD-WAN and SASE platform that delivers networking and security from a global cloud of points of presence (PoPs). Unlike traditional SD-WAN that relies on edge appliances and optional security add-ons, Cato centralizes traffic steering, WAN optimization, and security inspection in its cloud, reducing on-prem complexity and policy fragmentation.

- Is Cato Networks an SD-WAN provider or a SASE platform?

Cato is best described as a full SASE platform with native SD-WAN. SD-WAN is the transport and routing foundation, while security services such as SWG, FWaaS, IPS, CASB, DLP, ZTNA, and DNS security are applied inline within the same cloud enforcement layer.

- How does Cato Networks handle WAN traffic differently than MPLS?

Cato replaces MPLS with encrypted tunnels over an Internet underlay, but traffic does not traverse the public Internet end-to-end. Instead, traffic enters the Cato private backbone at the nearest PoP, where routing decisions, optimization, and security are applied before it reaches its destination.

- Does Cato Networks use a private backbone?

Yes. Cato operates a global private backbone interconnecting its PoPs. Once traffic reaches a PoP, it can traverse this backbone rather than relying solely on unpredictable Internet paths, improving latency consistency and packet-loss resilience for site-to-site and cloud connectivity.

- How does Cato Networks perform traffic steering and path selection?

Cato uses application-aware network rules combined with real-time link telemetry (latency, jitter, packet loss) to dynamically steer traffic. Engineers can define policies that prioritize specific applications, prefer backbone paths, or optimize for real-time performance metrics.

- What security services are included in Cato’s SASE offering?

Cato’s SSE stack includes secure web gateway (SWG), next-generation firewall as a service (FWaaS), intrusion prevention (IPS), anti-malware, DNS security, CASB, DLP, remote browser isolation (RBI), and zero trust network access (ZTNA), all enforced through a unified policy engine.

- What is Cato’s Single-Pass Cloud Engine and why does it matter?

Cato’s Single-Pass Cloud Engine (SPACE) inspects traffic once and applies multiple security controls without service chaining. This reduces latency, avoids policy conflicts, and enables consistent logging and enforcement across networking and security functions.

- How does Cato Networks support multi-cloud connectivity?

Cato supports multi-cloud environments through cloud interconnects, virtual sockets (vSockets), and IPsec/BGP integration across AWS, Azure, Google Cloud, and Oracle Cloud. This allows enterprises to connect clouds, branches, and users into a single routed and secured WAN fabric.

- Can Cato Networks integrate with existing firewalls or routers?

Yes. Cato supports IPsec tunnel sites with optional BGP, allowing integration with third-party firewalls, routers, or cloud VPN gateways. However, feature depth and resiliency are greatest when using native Cato Sockets or vSockets.

- How does Cato Networks address connectivity challenges in China?

Cato uses licensed in-country partners and China-specific PoP architecture to provide compliant connectivity. Traffic can be optimized through authorized paths with predictable performance, avoiding the instability and regulatory risk of unmanaged cross-border VPNs.

- Is Cato Networks suitable for global enterprises with remote users?

Yes. Cato treats remote users as first-class network nodes, applying the same security and routing policies as branch sites. Users connect to the nearest PoP, gaining secure access to SaaS, Internet, and private applications without traditional VPN concentrators.

- How does Cato Networks compare to SD-WAN vendors that rely on edge appliances?

Edge-centric SD-WAN vendors place routing and security primarily at the branch. Cato shifts these functions to the cloud, reducing appliance sprawl and simplifying global policy management. This model favors cloud-first, SaaS-heavy, and globally distributed organizations.

- What role does AI security play in Cato’s roadmap?

Cato acquired Aim Security to extend SASE enforcement into AI interactions and AI application runtime security. The goal is to inspect prompts, responses, and AI workflows using the same policy and logging framework already applied to web and SaaS traffic.

- Is Cato Networks a good replacement for MPLS?

For many enterprises, yes. Cato is commonly deployed as an MPLS replacement that delivers comparable reliability with greater flexibility, integrated security, and lower operational overhead—especially for globally distributed and cloud-heavy environments.

- Who can help evaluate whether Cato Networks or another SD-WAN platform is the right fit?

Macronet Services helps enterprises evaluate SD-WAN and SASE platforms across vendors and carriers. As a partner to leading SD-WAN providers and all major Tier-1 ISPs, Macronet Services provides independent, engineering-led guidance for global network design and vendor selection.
